SYNTHETIC PROPAGANDA

Last updated: May 28, 2025

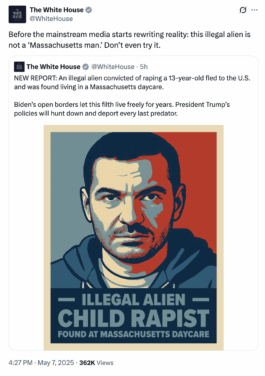

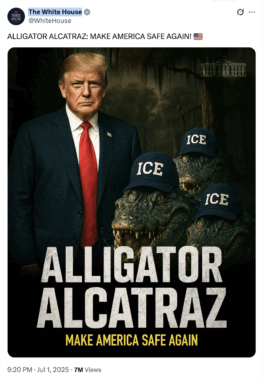

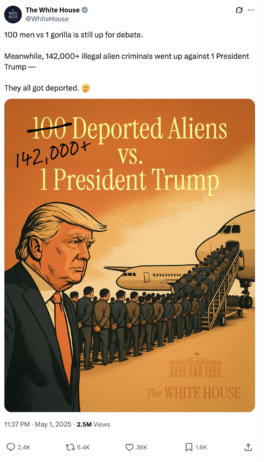

Social media communication of government agencies should ideally be truthful and impartial to sustain public trust in government and support democratic goals (DePaula & Hansson, 2025). This is clearly not the case with the current social media strategies of the White House and others, who produce previously unseen transgressive content that violates long-agreed rules of official protocol.

US government communication in 2025, which at times resembles Internet trolling, uses generative AI tools, trending filters, and memetic narratives such as the Ghibli filter and ASMR. I am collecting empirical evidence, literature, and first observations here, with the aim of turning them into a more structured and formal account at some point. This is an ongoing investigation.

Preliminary Research

The dissemination of propaganda has always been closely linked to technological developments (Klincewicz et al., 2025). Generative artificial intelligence (GenAI) has given rise to new forms of propaganda (Olanipekun, 2025; Saab, 2024). Drawing on Jowett and O'Donnell (2018), synthetic propaganda here refers to the deliberate, systematic attempt to shape perceptions and influence the understanding of events in line with the interests of the sender(s) through GenAI-generated content. Synthetic propaganda surpasses traditional forms of propaganda in three measurable dimensions: scope, reach, and speed (Fox, 2020). It also produces convincing disinformation (Spitale et al., 2023). While ethical debates around propaganda have always been complex (Cunningham, 1992), the use of generative AI technologies, as seen in the controversial Gaza AI video shared by President Trump (Holmes & Owen, 2025) and pro-AfD posts on TikTok (Schultheis, 2025), introduces new ethical challenges (Bergman et al., 2024; Gabriel, 2020). These go beyond the mere spread of false information and include issues of intermediary responsibility and appropriate countermeasures.

What characteristics does synthetic propaganda display? How is it used by different actors? And to what extent does it violate a) platform-specific GenAI guidelines, b) regulations such as the EU AI Act (Smuha, 2024), and c) GenAI principles (Laine et al., 2025)?

Initial findings reveal a discrepancy between ethical standards and their practical implementation. Although the majority of analyzed content violates existing guidelines, these are rarely enforced. The ethical challenges in dealing with synthetic propaganda demand a broad interdisciplinary discussion. This requires greater knowledge of the digital forms of manipulation.

Data

The White House / X

The White House / Instagram

realdonaldtrump / Instagram

“That’s not me that did it, I have no idea where it came from. Maybe it was AI. But I know nothing about it. I just saw it last evening.”

But this weekend, Trump began sharing AI slop on a level we’ve not seen before (404 Media)

Literature

Bergman, S., Marchal, N., Mellor, J., et al. (2024). STELA: A community-centred approach to norm elicitation for AI alignment. Scientific Reports, 14, 6616. https://doi.org/10.1038/s41598-024-56648-4

Cunningham, S. B. (1992). Sorting out the ethics of propaganda. Communication Studies, 43(4), 233-245.

DePaula, N., & Hansson, S. (2025). Politicization of Government Social Media Communication: A Linguistic Framework and Case Study. Social Media + Society, 11(2), 20563051251333486.

Fox, J. (2020). ‘Fake news’–the perfect storm: historical perspectives. Historical Research, 93(259), 172-187.

Gabriel, I. (2020). Artificial intelligence, values, and alignment. Minds and Machines, 30(3), 411-437.

Holmes, O., & Owen, P. (2025, February 26). Trump faces Truth Social backlash over AI video of Gaza with topless Netanyahu and bearded bellydancers. The Guardian.

Jowett, G. S., & O'Donnell, V. (2018). Propaganda & persuasion. Sage Publications.

Klincewicz, M., Alfano, M., & Fard, A. E. (2025). Slopaganda: The interaction between propaganda and generative AI. arXiv preprint arXiv:2503.01560.

Laine, J., Minkkinen, M., & Mäntymäki, M. (2025). Understanding the Ethics of Generative AI: Established and New Ethical Principles. Communications of the Association for Information Systems, 56(1), 7.

Muñoz, K., & Pericàs Riera, M. (2025, May). The influence evolution. DGAP Policy Brief 13. German Council on Foreign Relations. https://doi.org/10.60823/DGAP-25-42264-en

Olanipekun, S. O. (2025). Computational propaganda and misinformation: AI technologies as tools of media manipulation.

Saab, B. (2024). Manufacturing deceit: How generative AI supercharges information manipulation. National Endowment for Democracy, International Forum for Democratic Studies.

Sandelowski, M. (1995). Sample size in qualitative research. Research in Nursing & Health, 18(2), 179-183.

Schultheis, E. (2025, February 20). How Germany’s far right is harnessing AI to win votes. POLITICO.

Silva-Atencio, G. (2025). The challenges and opportunities for ethics in generative artificial intelligence in the digital age. Dyna, 92(236), 26.

Spitale, G., Biller-Andorno, N., & Germani, F. (2023). AI model GPT-3 (dis)informs us better than humans. Science Advances, 9(26), eadh1850.

Smuha, N. A. (2024). Regulation 2024/1689 of the Eur. Parl. & Council of June 13, 2024 (EU Artificial Intelligence Act). International Legal Materials, 1-148.

Links

Roland Meyer - Generative KI und die Ästhetik des digitalen Faschismus [Generative AI and the aesthetics of digital fascism] (re:publica 25, May 2025)

The AI Disinfo Hub (EU Disinfo Lab, May 2025)

Understanding the Role of GenAI in Elections: A Crucial Endeavor for 2024 (TUM Think Tank, May 2025)

In his slop era (altrightedelete, May 12, 2025)

The AI Slop Presidency (404 Media, May 7, 2025)

Cruel Communications (Extreme Measures, May 6, 2025)

Trump criticised after posting AI image of himself as Pope (BBC, May 4, 2025)

The Trump administration is trolling all of us (Independent, April 30, 2025)

Die Ästhetik des digitalen Faschismus [The aesthetics of digital fascism] (FAZ, April 27, 2024)

‘Trump Gaza’ AI video intended as political satire, says creator (Guardian, March 6, 2025)

Donald Trump’s A.I. Propaganda (New Yorker, March 5, 2025)

Trump pleads ignorance after sharing AI-generated Taylor Swift images (Rolling Stone, August 22, 2024)

Trump’s crafty new use of AI (Politico, August 22, 2024)

Trump supporters target black voters with faked AI images (BBC, March 4, 2024)